All projects

Arts & Culture

Design tool for generating typographic posters and animations

Arts & Culture

Interactive timeline exhibit for a city archive

Art

Generative audio-visual artwork that fuses color and motion of multiple videos

Arts & Culture

Interactive archive of the activities of a Master’s programme

Art

Generative animations based on continuous application of a filter kernel

Arts & Culture

Data analysis and visualizations of one year's worth of photos

Public Interest

User-driven online propaganda tool on the topic of net neutrality

Applied

Tools for analyzing, visualizing, and comparing formal characteristics of movies

Applied

Finding interesting configurations for a generative artwork through data analysis

Commercial

Type foundry website with integrated online store

Experiment

Increasing the chances of finding mushrooms through geospatial data analysis

Arts & Culture

macOS screensaver for collectors of Orb (lite) NFTs by Harm van den Dorpel

Arts & Culture

Web3 site for minting NFTs continuously generated by a live art installation

Commercial

Website that lets users virtually place the product into their physical surroundings

Art

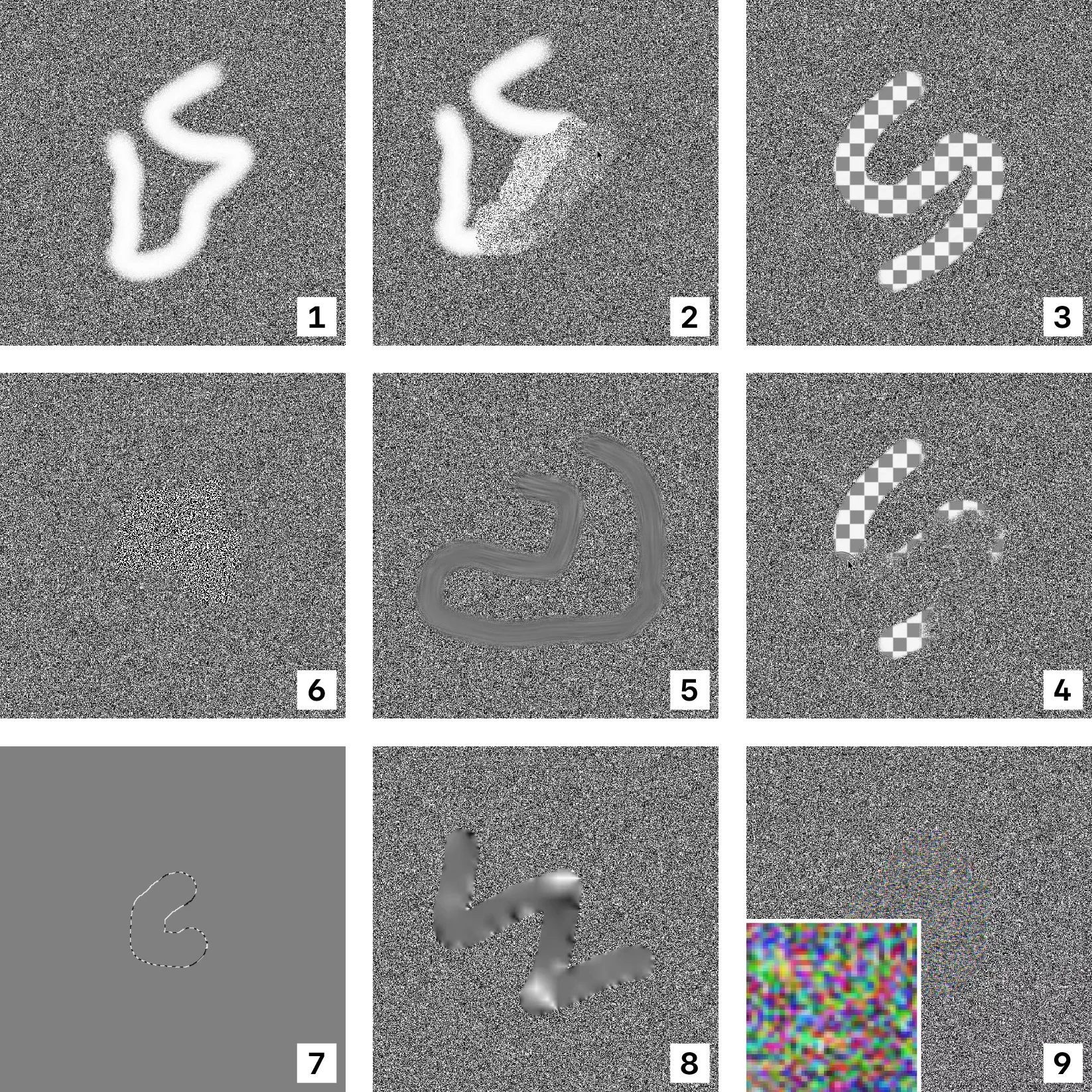

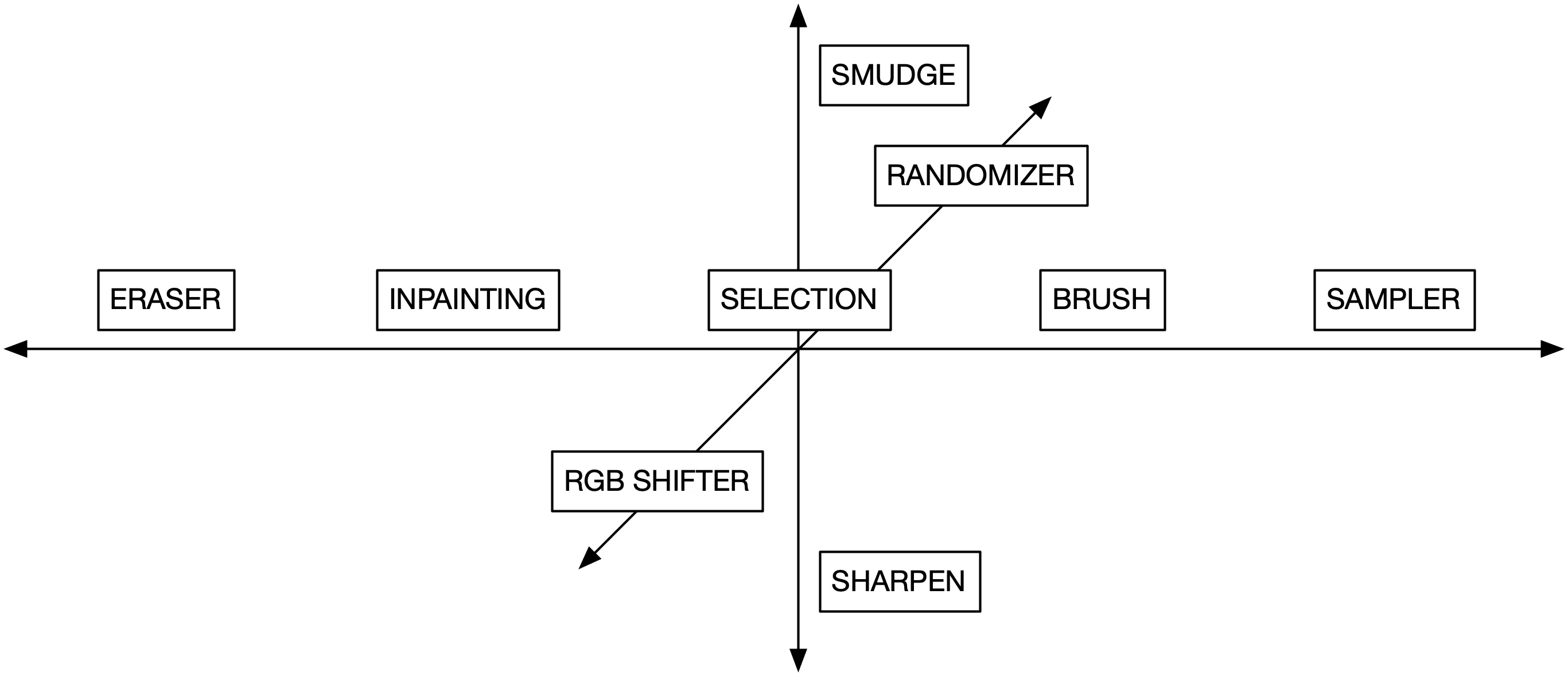

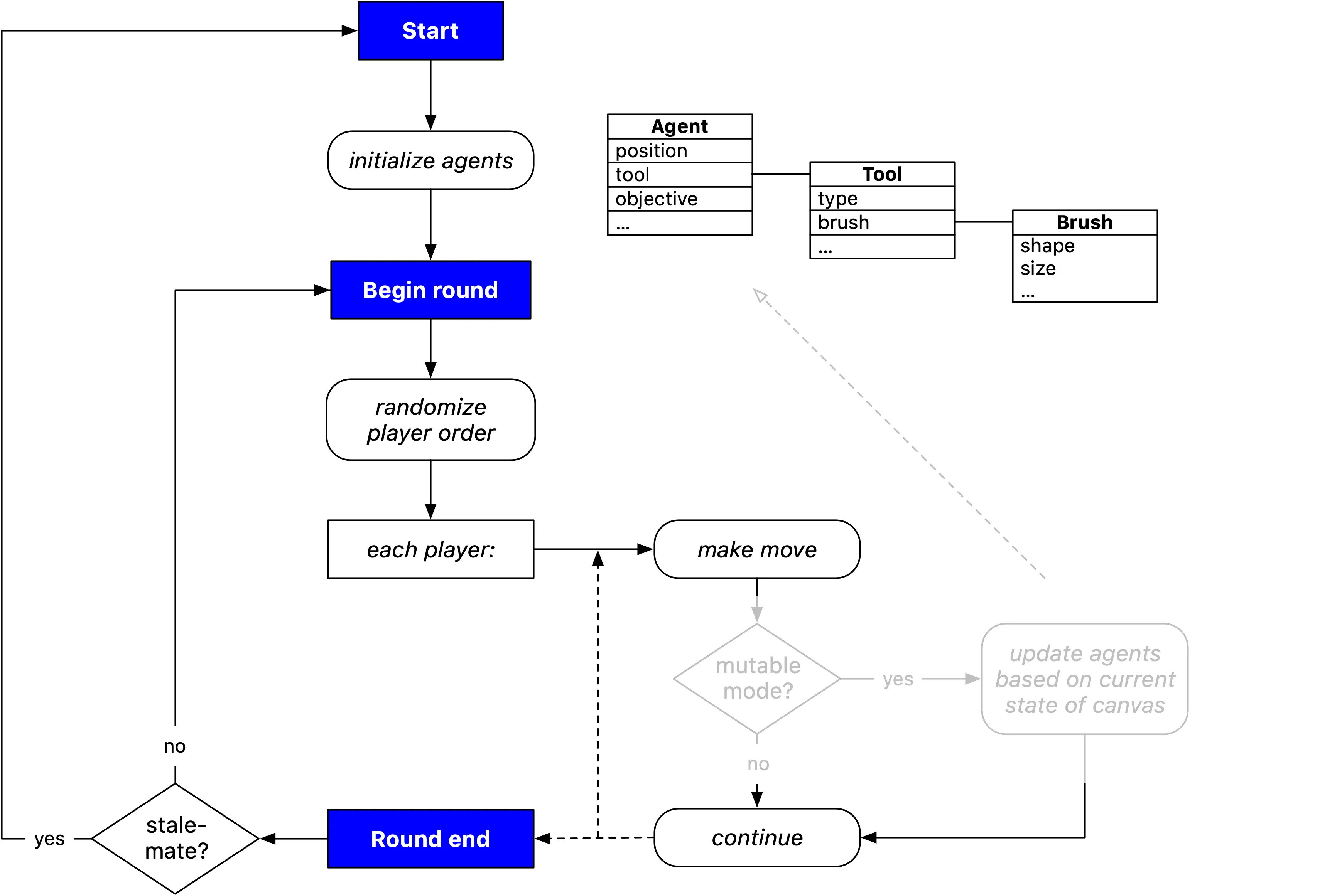

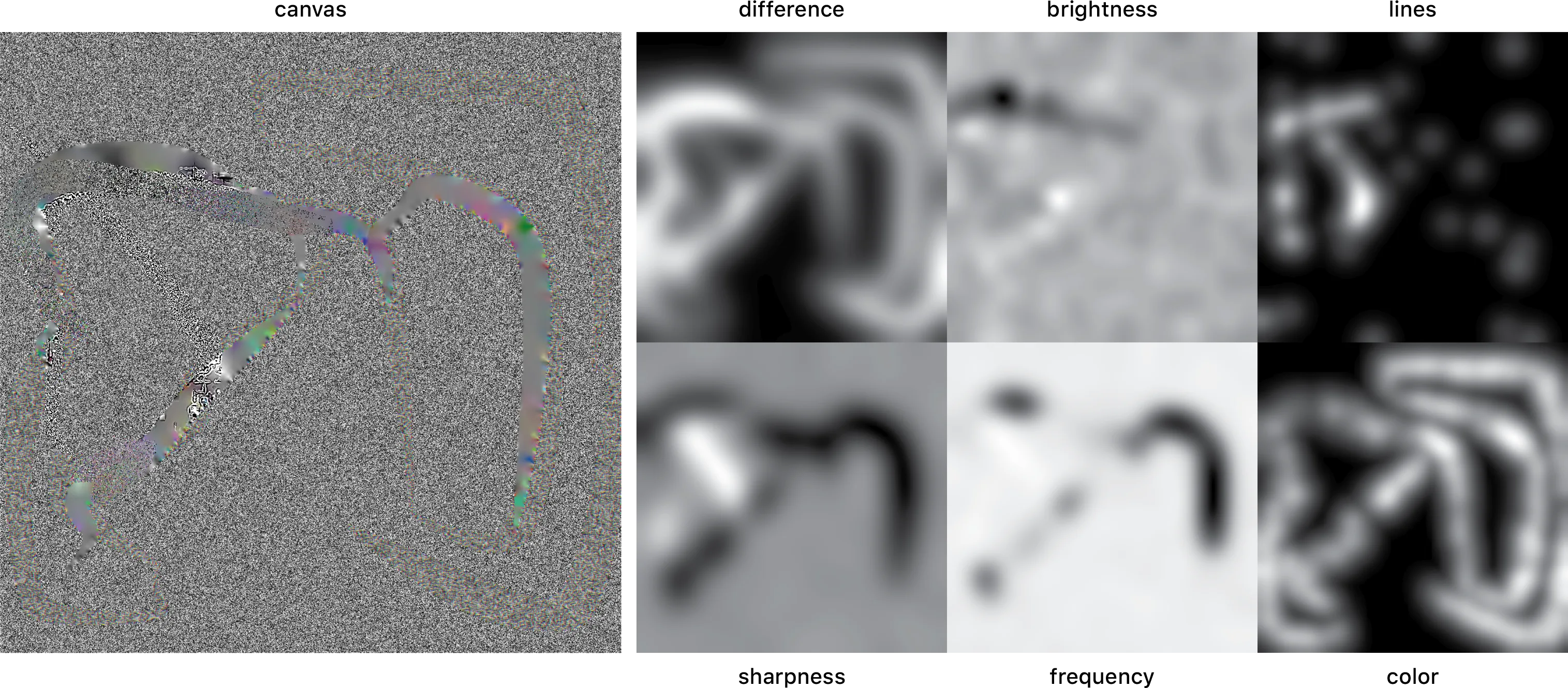

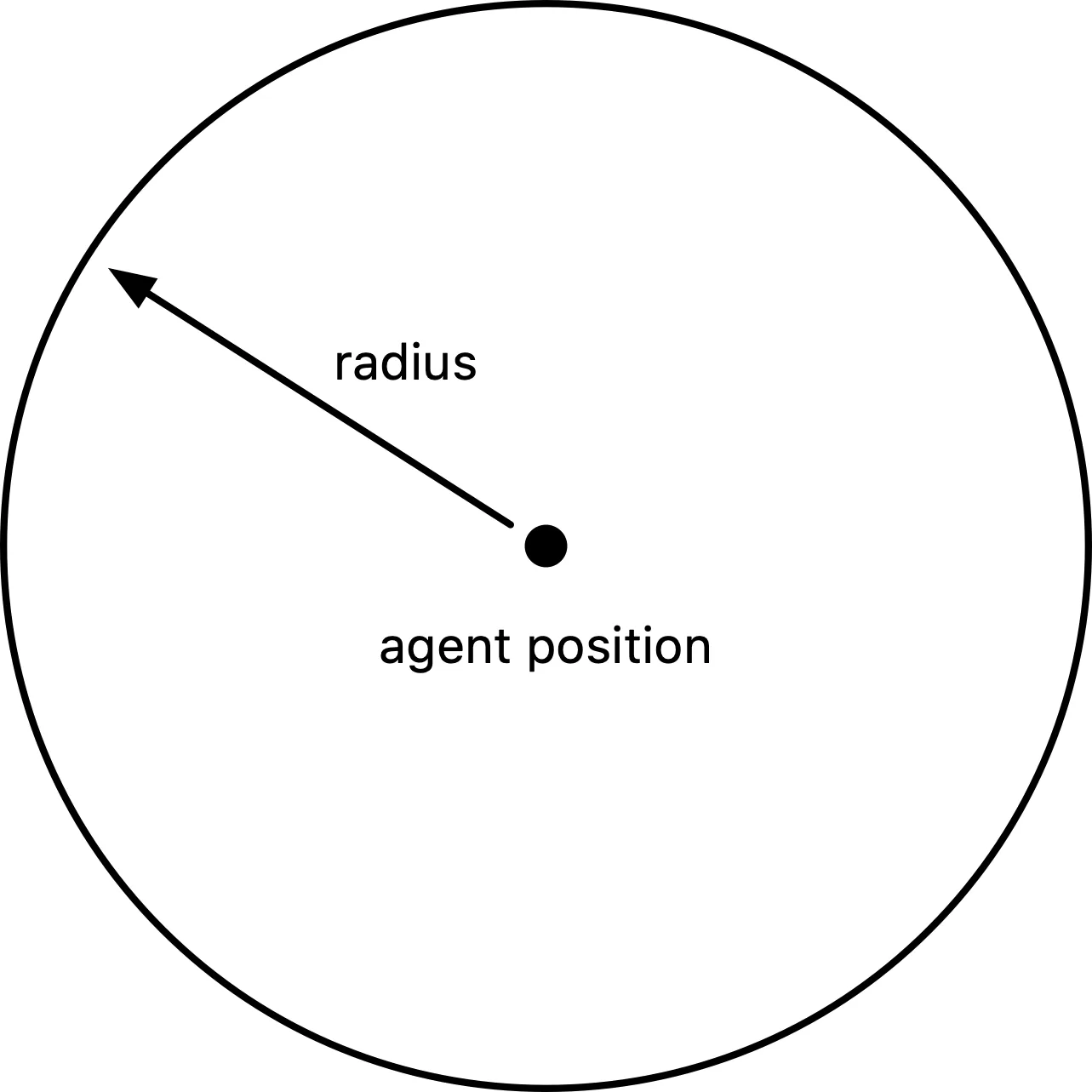

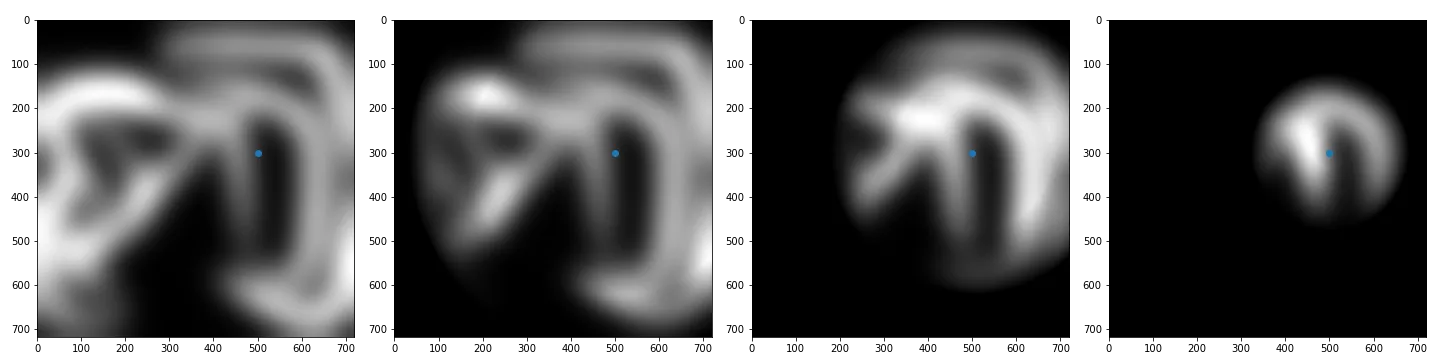

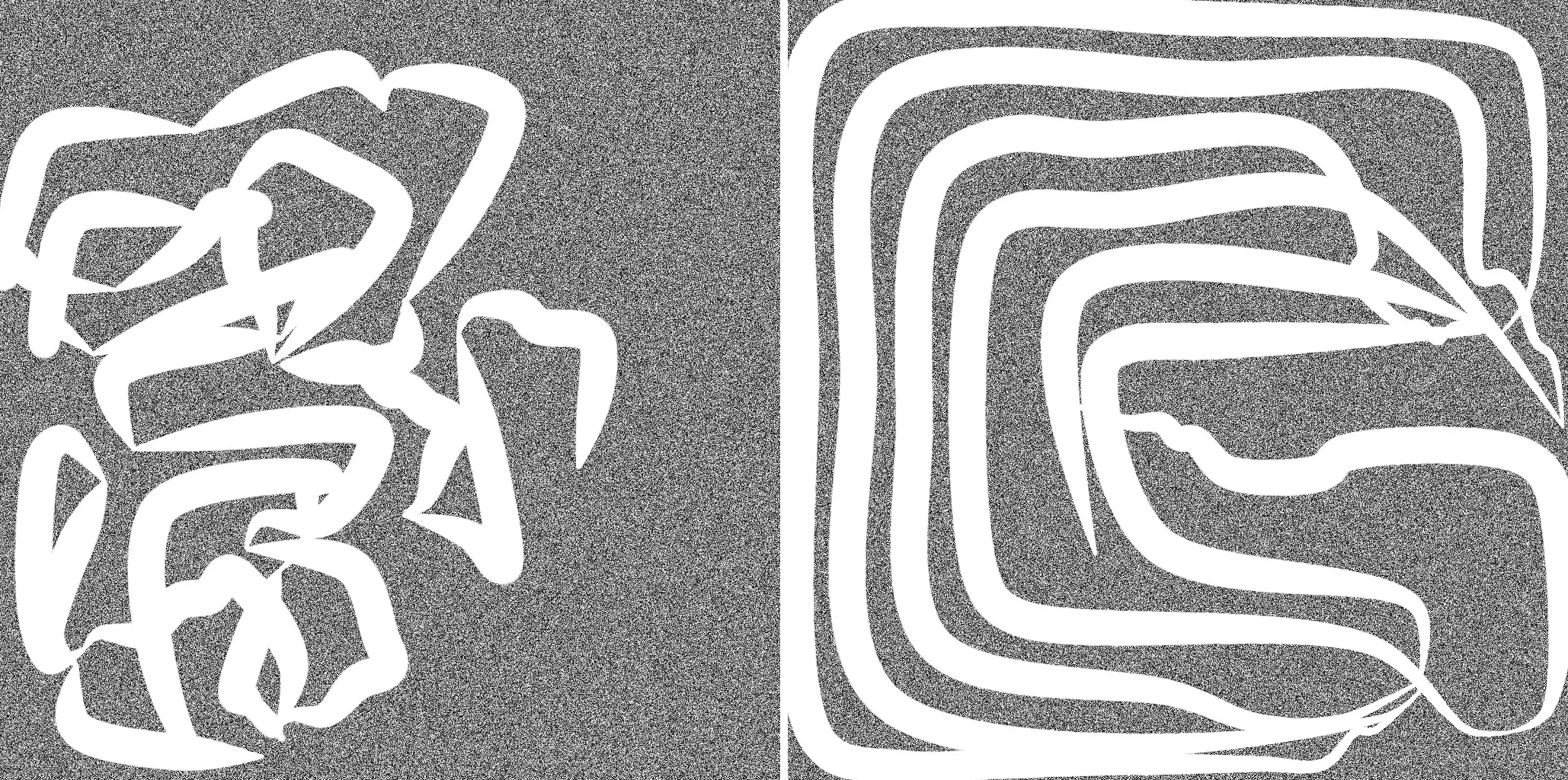

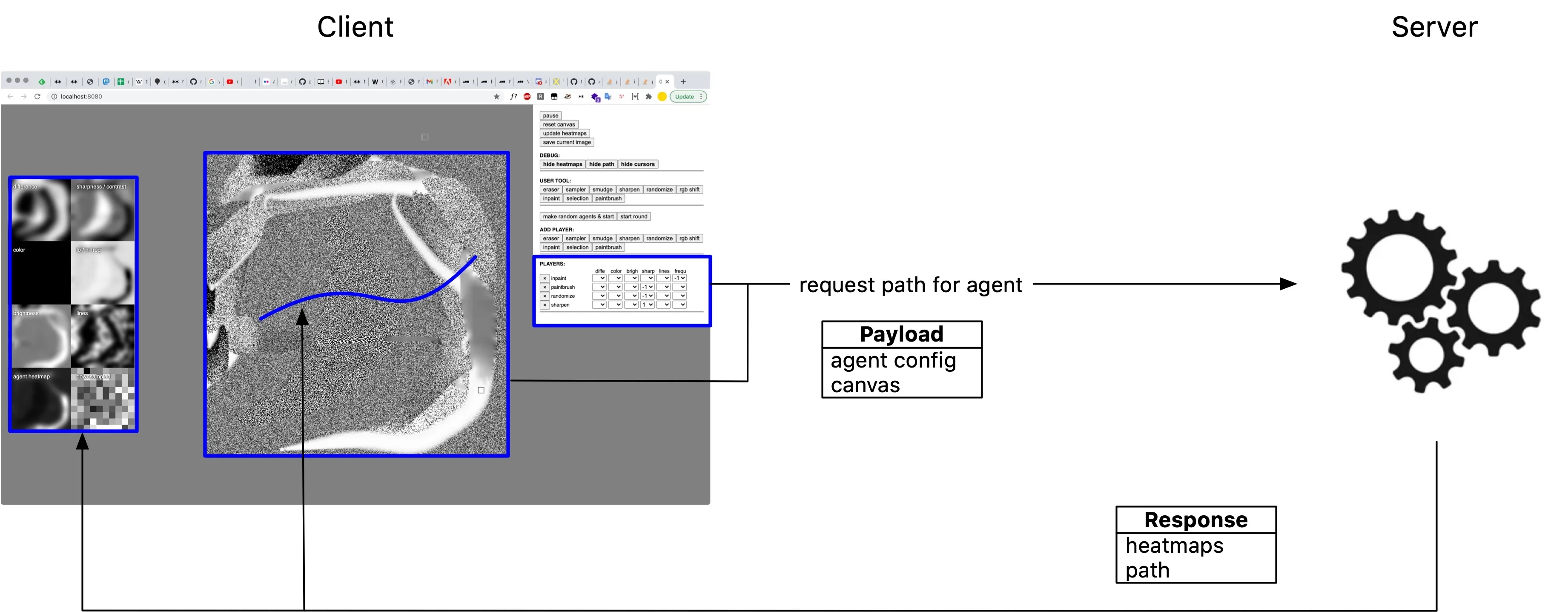

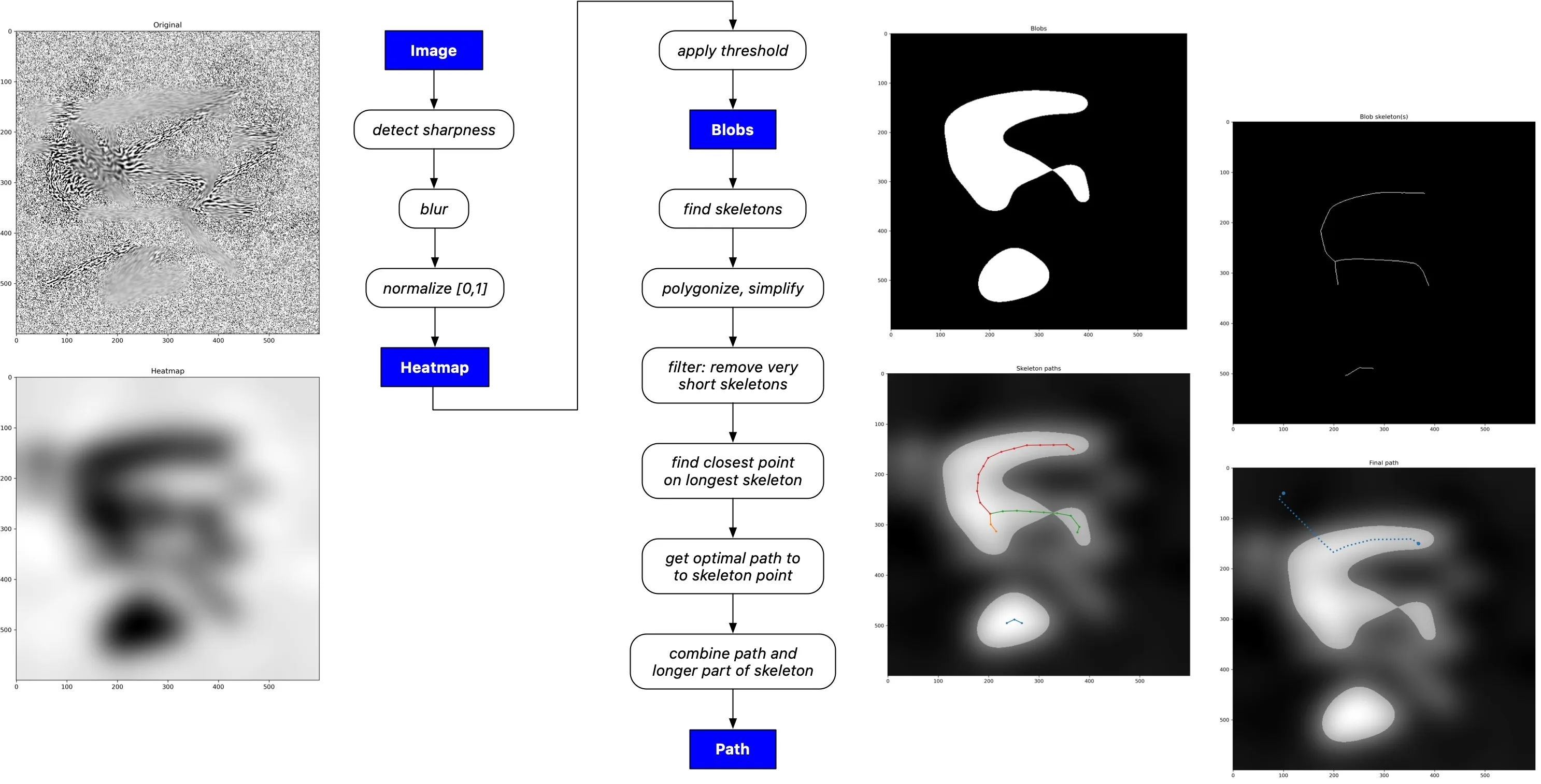

0..1

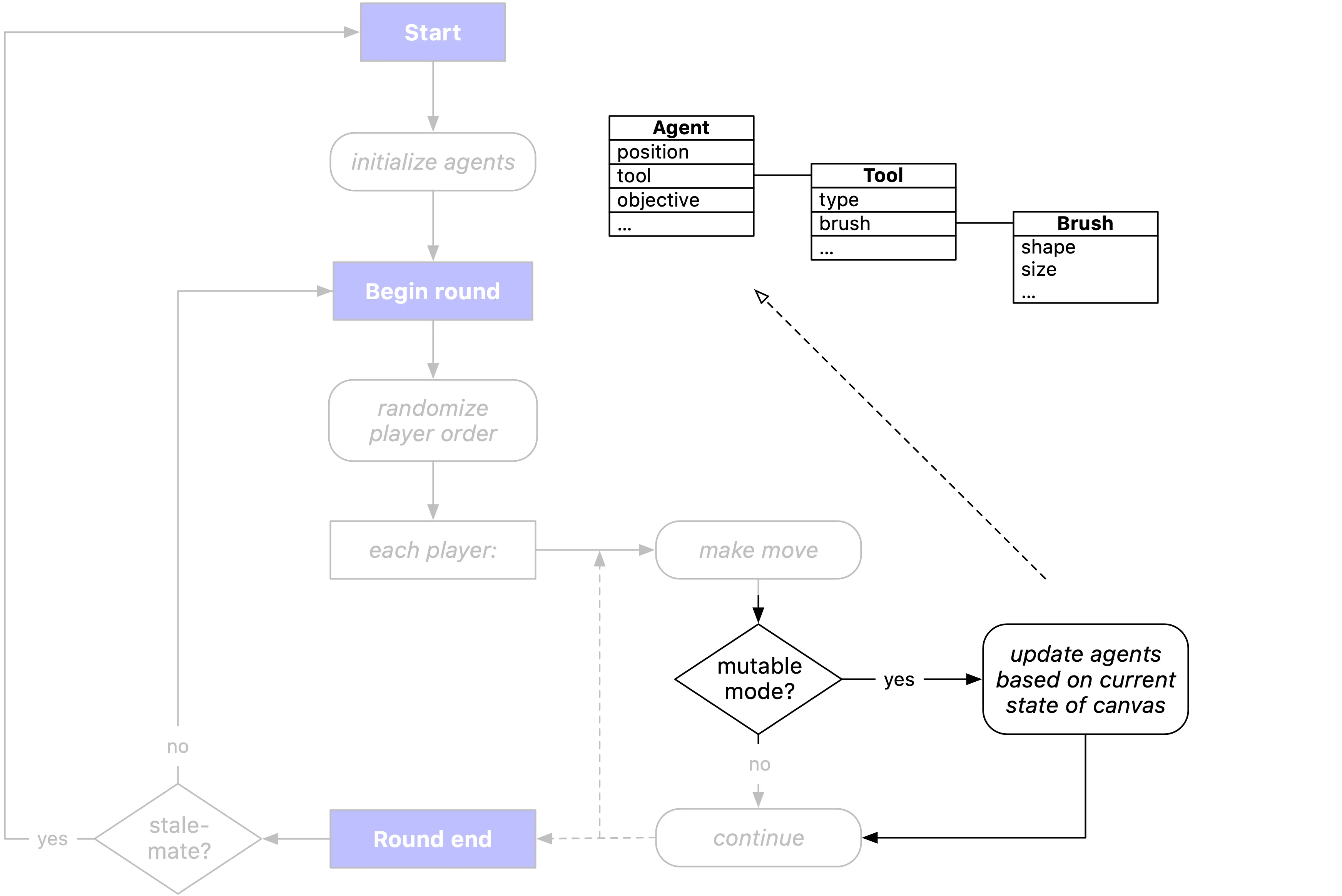

Self-playing simulation game using the language and mechanics of image editing

Applied

Finger tracking tool

Experiment

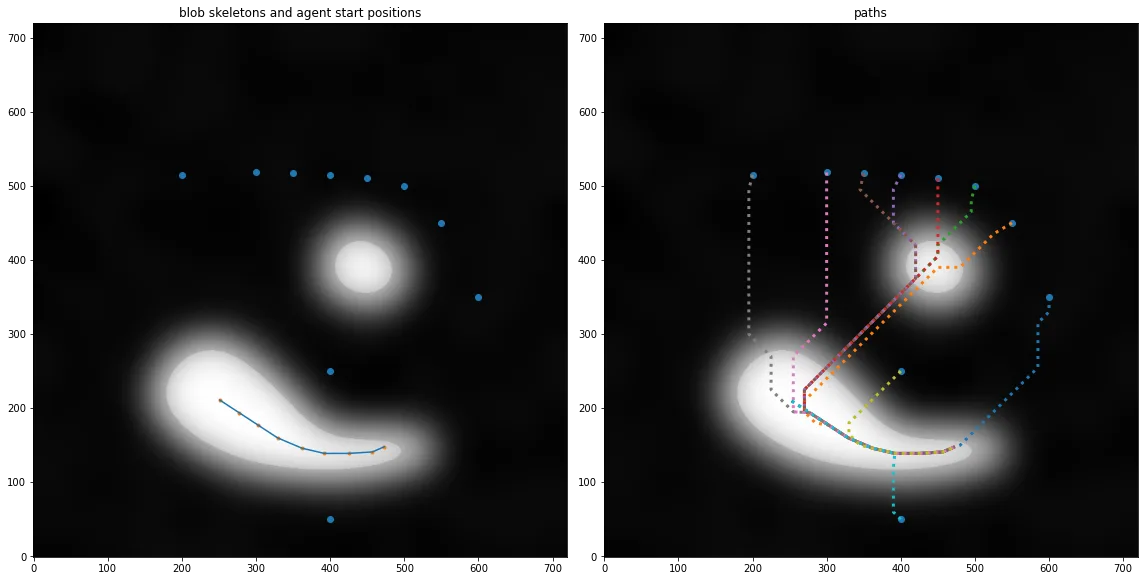

Simulating blobs of fluids with particles

Experiment

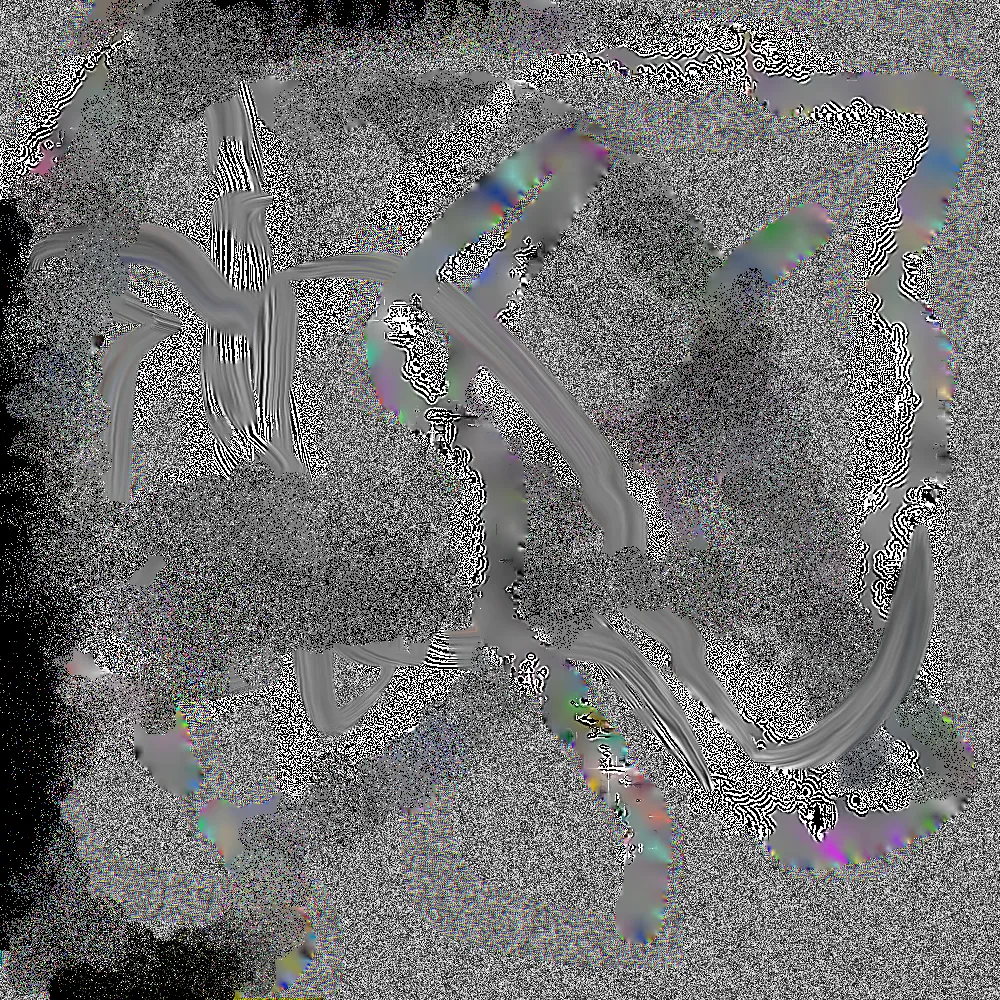

Force-based simulation of bands of particles

Experiment

Modified JPEG encoder for generating glitchy image effects

Art

A website that keeps eye contact

Art

A website that is all its past versions

Art

A website that lets you leave something behind for the next visitor

Art

A website that is just its analytics report

Commercial

Point-of-sale software for opticians to help customers choose the right lenses

Experiment

Mapping line drawings onto street networks

Commercial

Bespoke website for the release of the Logical typeface by Edgar Walthert

Misc

Proof-of-concept for an alternative, more powerful Are.na client

Experiment

GPS trace replay tool

Misc

Browser extension that collects texts of how designers describe themselves

Arts & Culture

Interactive animation for a music festival announcement page

Commercial

Website for a graphic design studio

Arts & Culture

Independent music publishing and streaming platform

Arts & Culture

Parametric typeface generator tool as part of a visual identity system

Public Interest

Graphical modelling tool for describing attack scenarios

Applied

Visualization showing how a text document was written and edited over time

Experiment

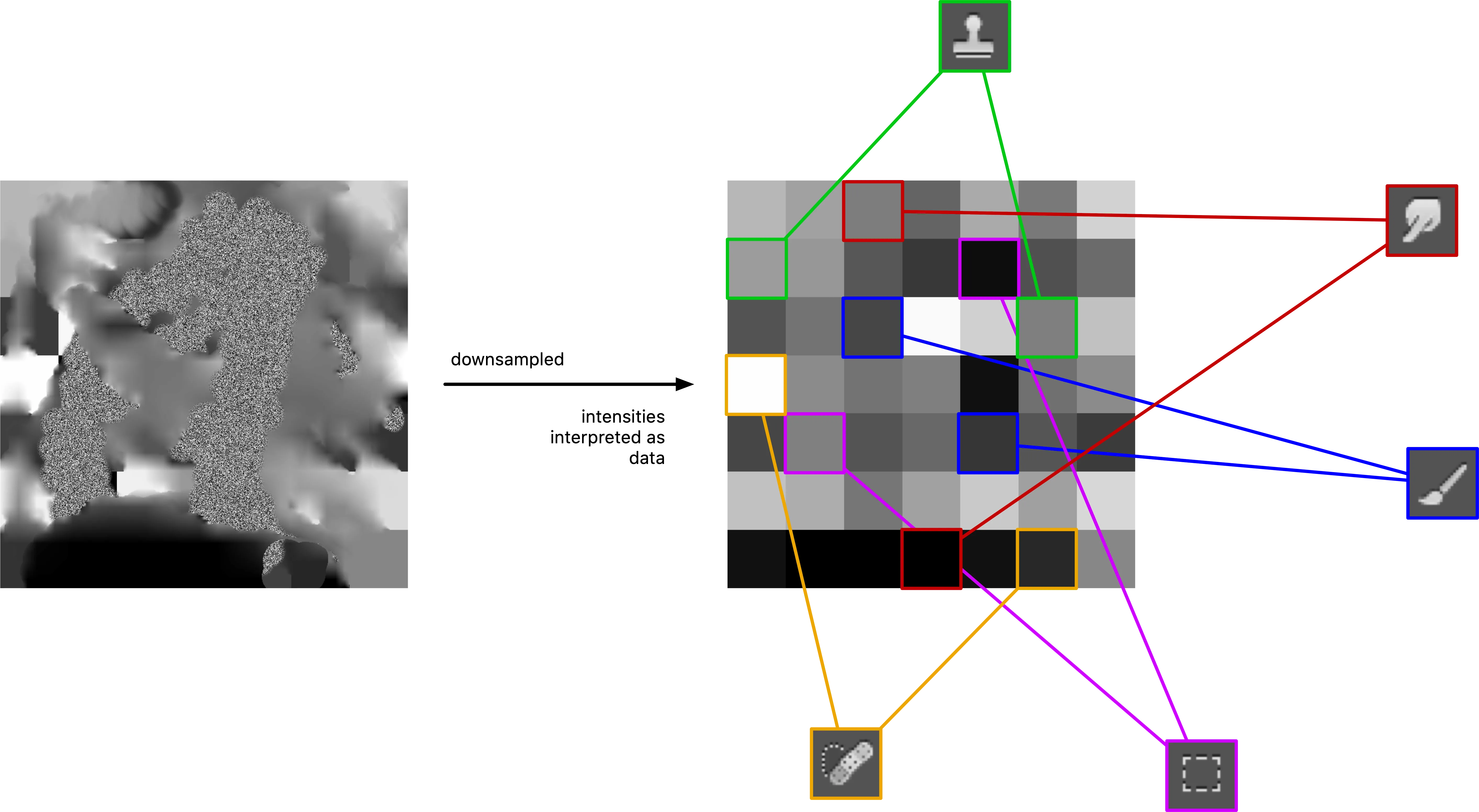

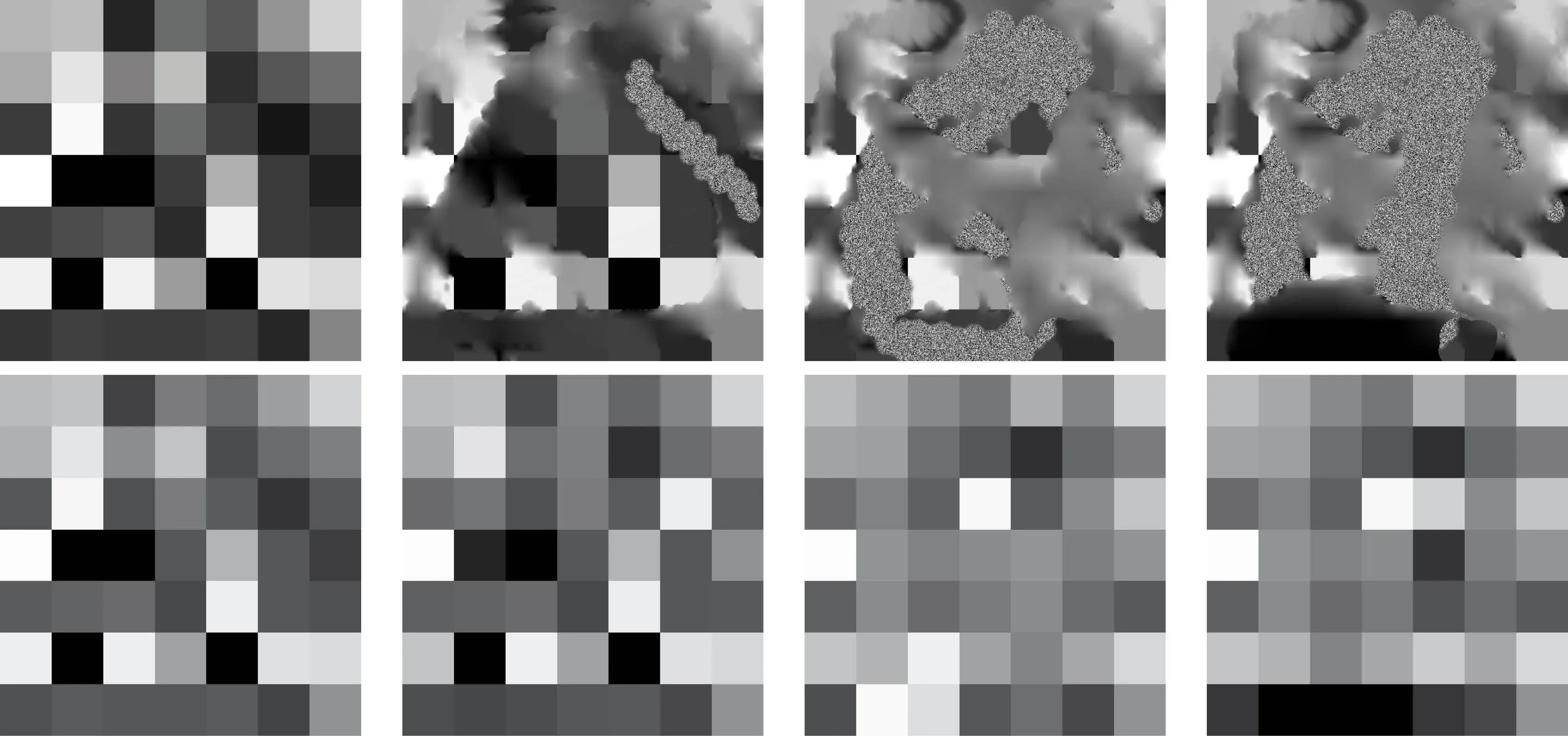

Experiments with movie image data