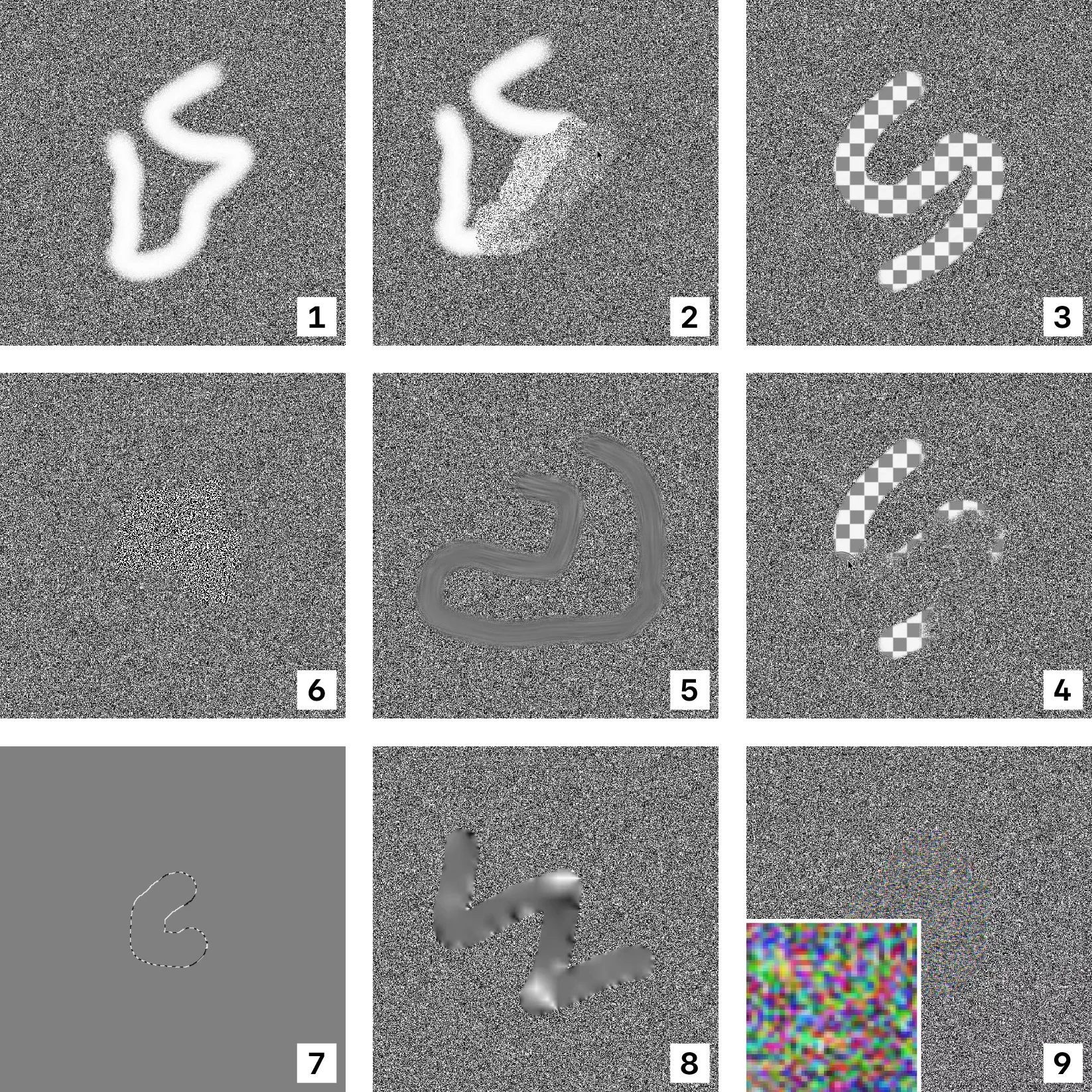

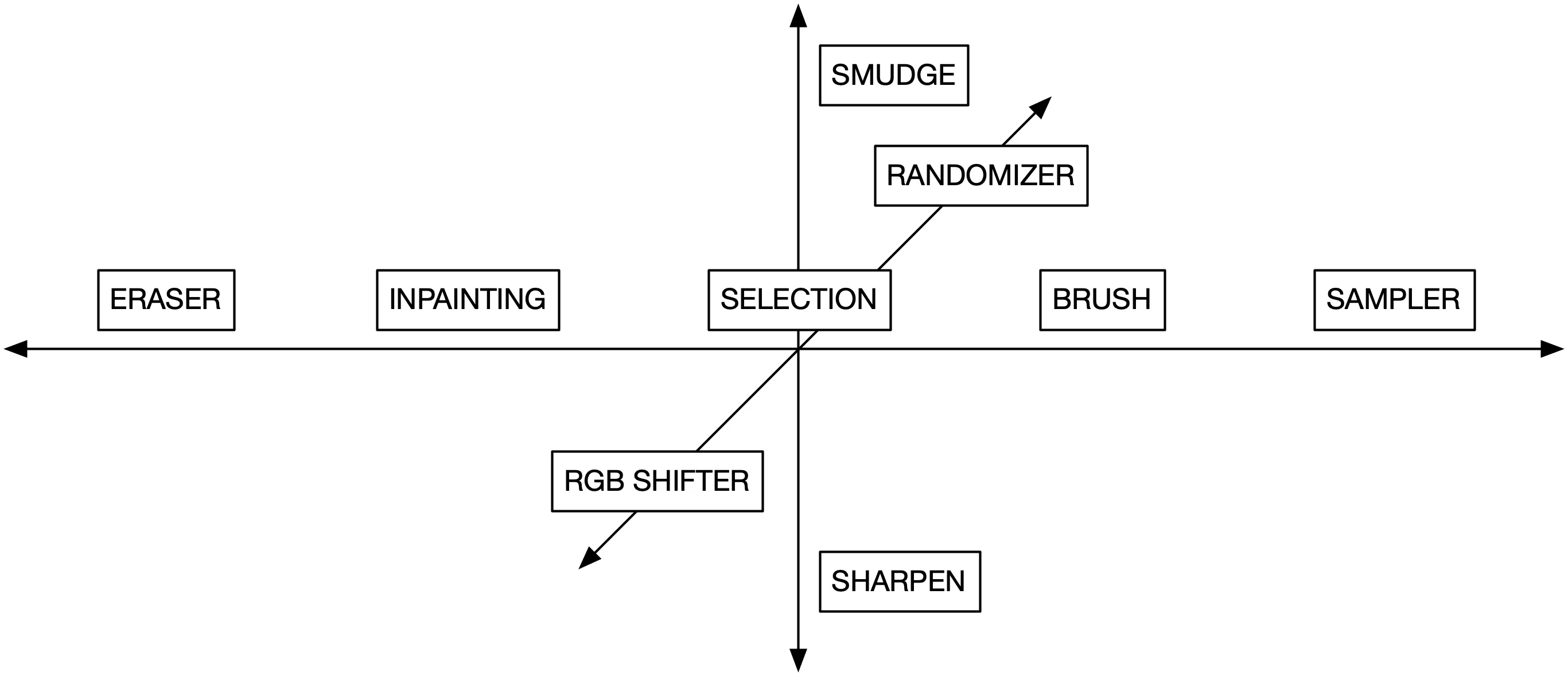

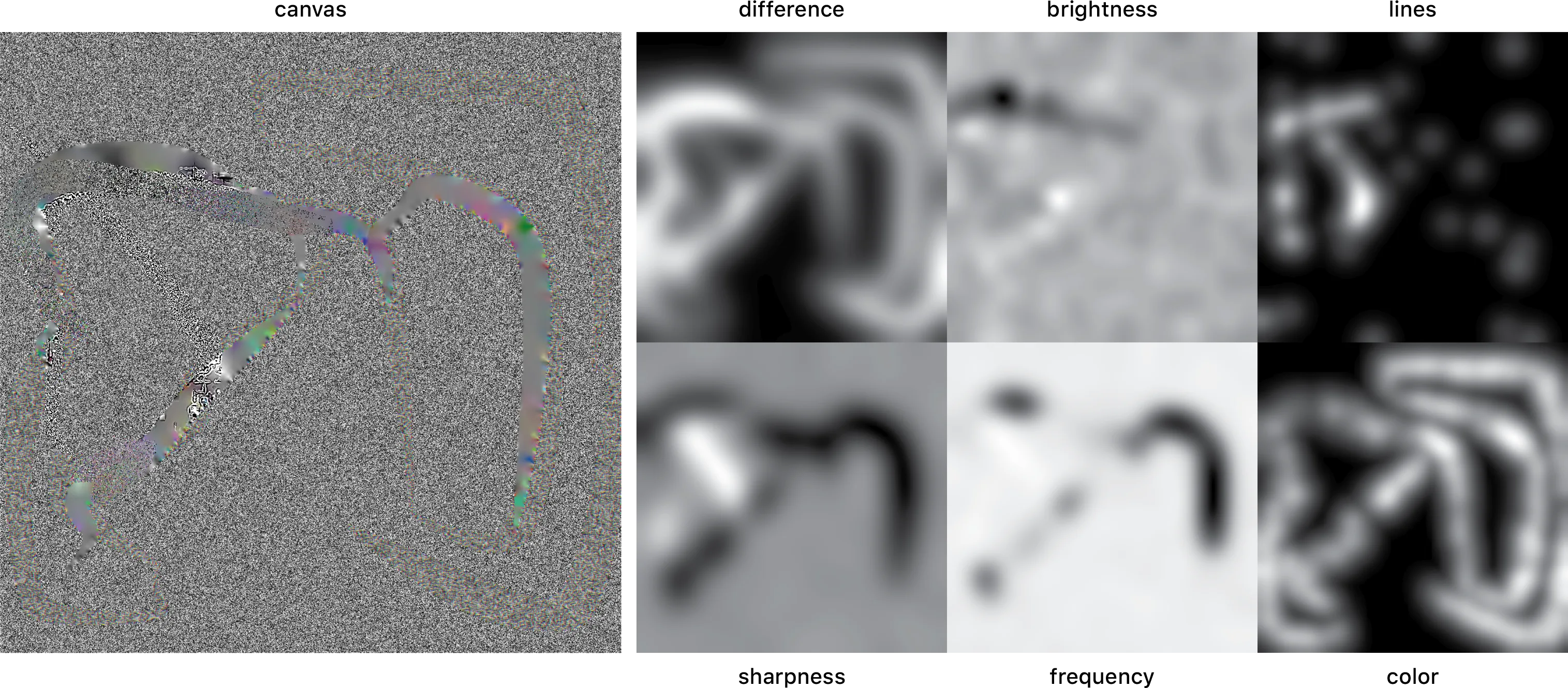

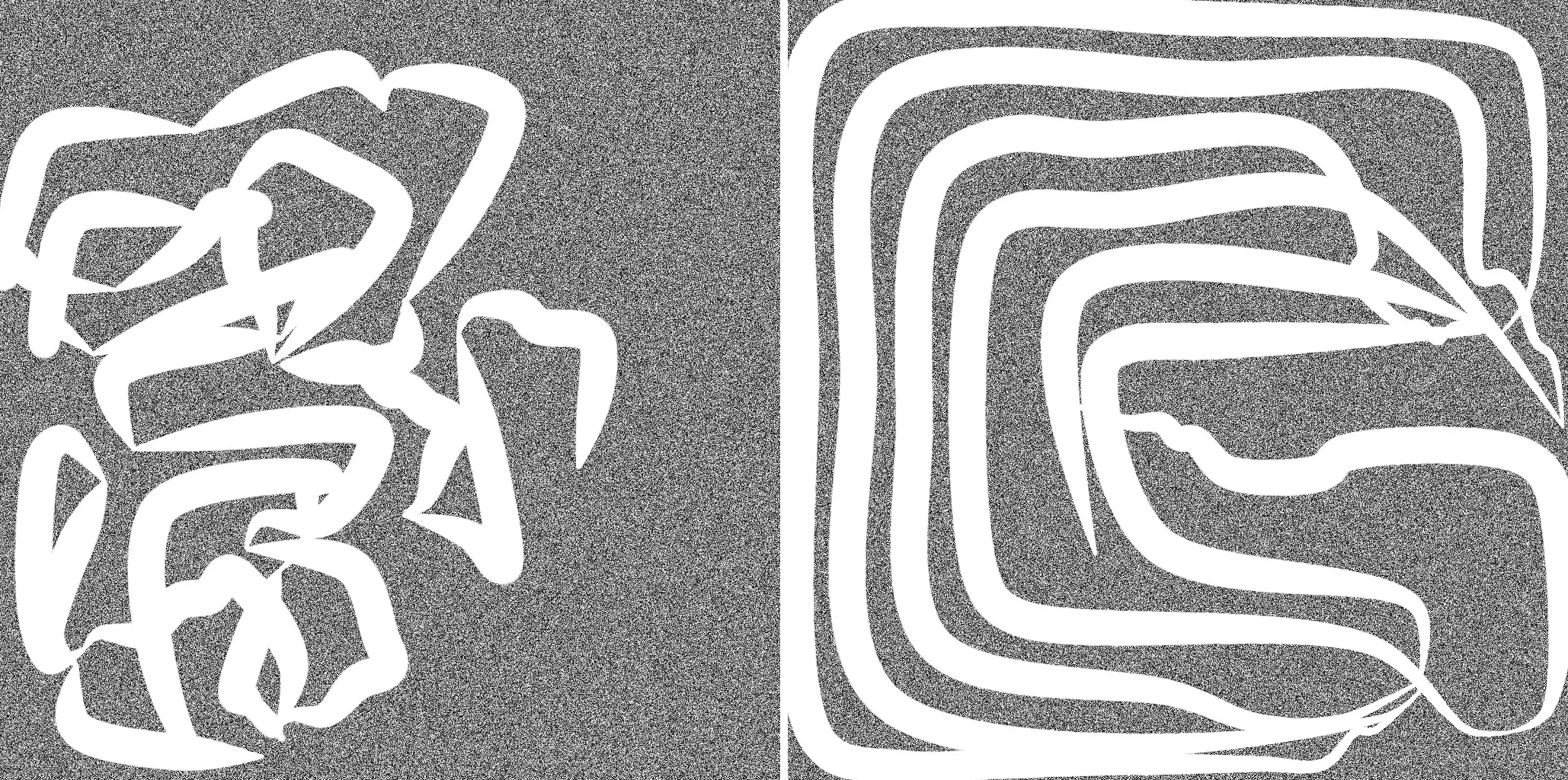

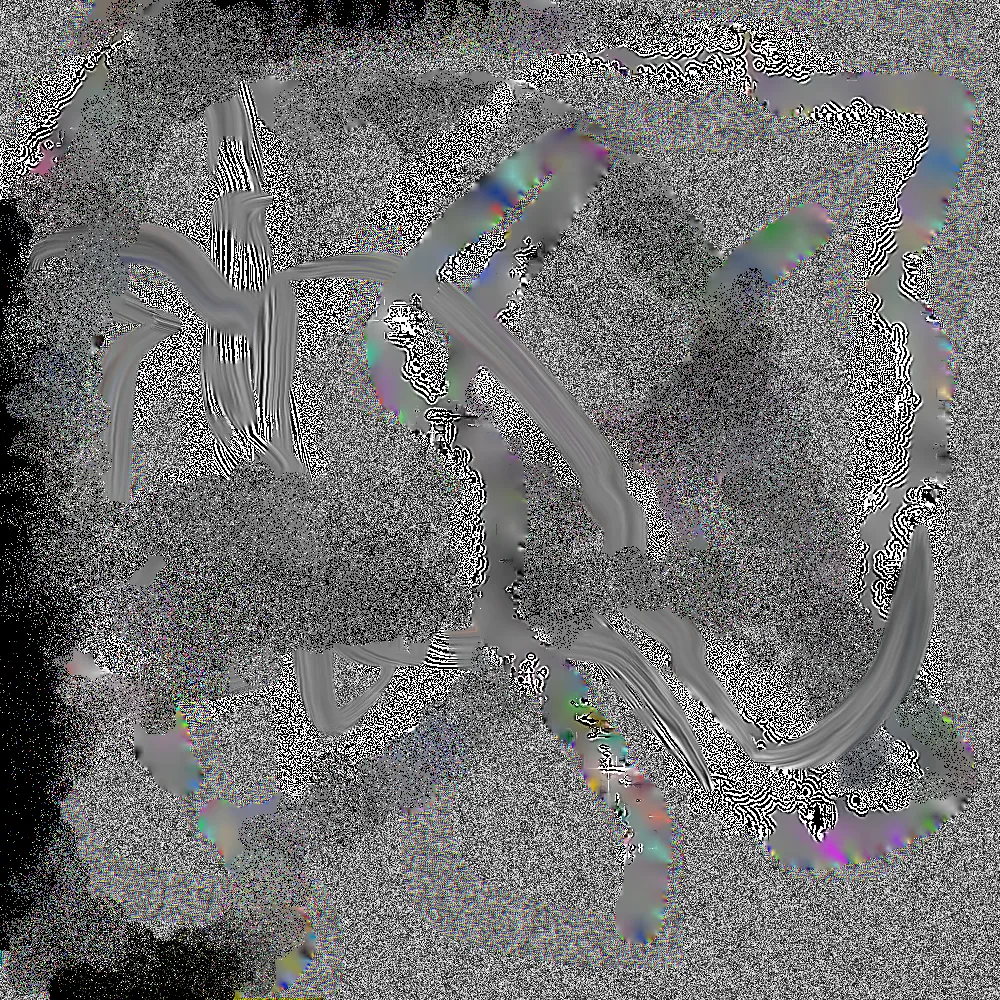

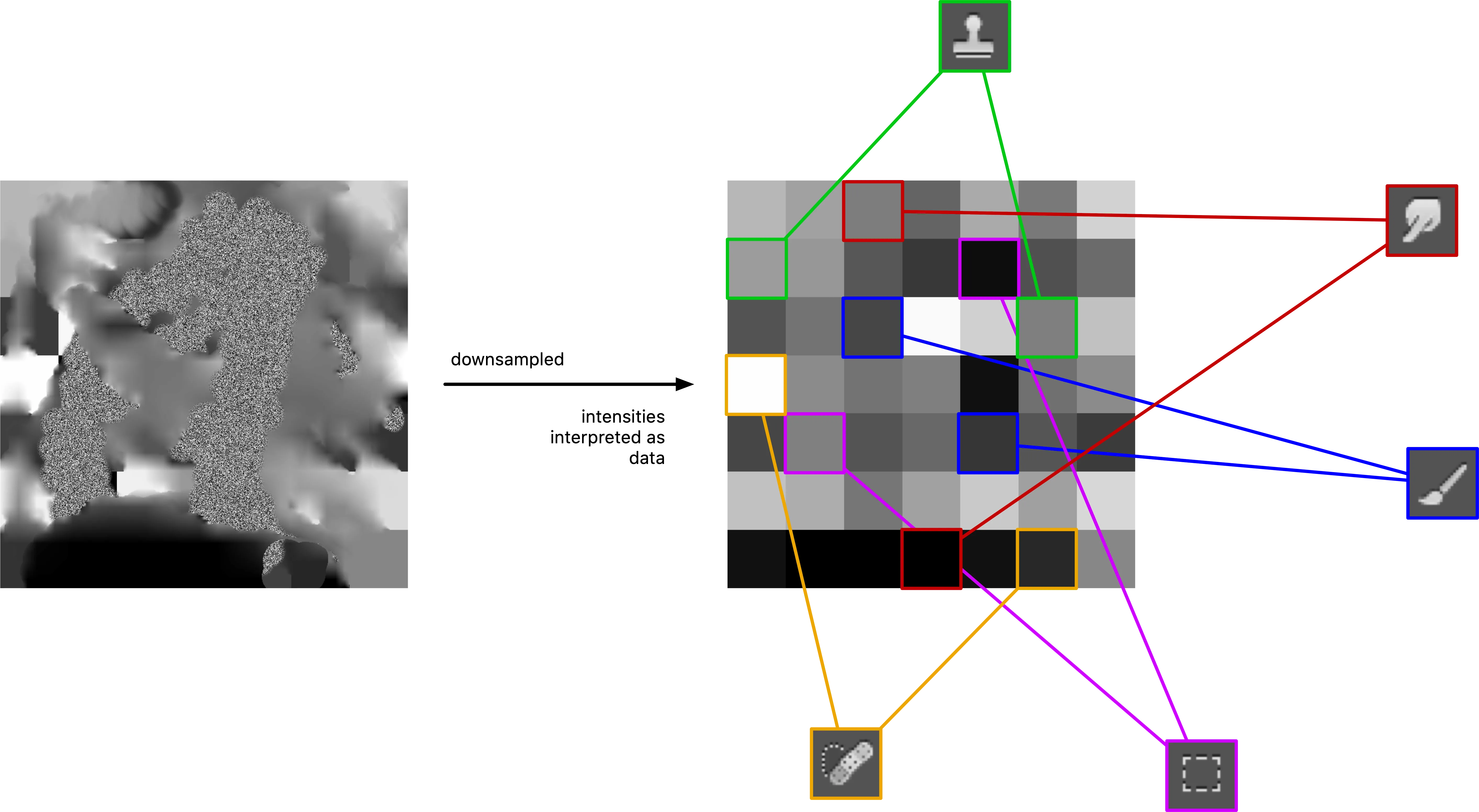

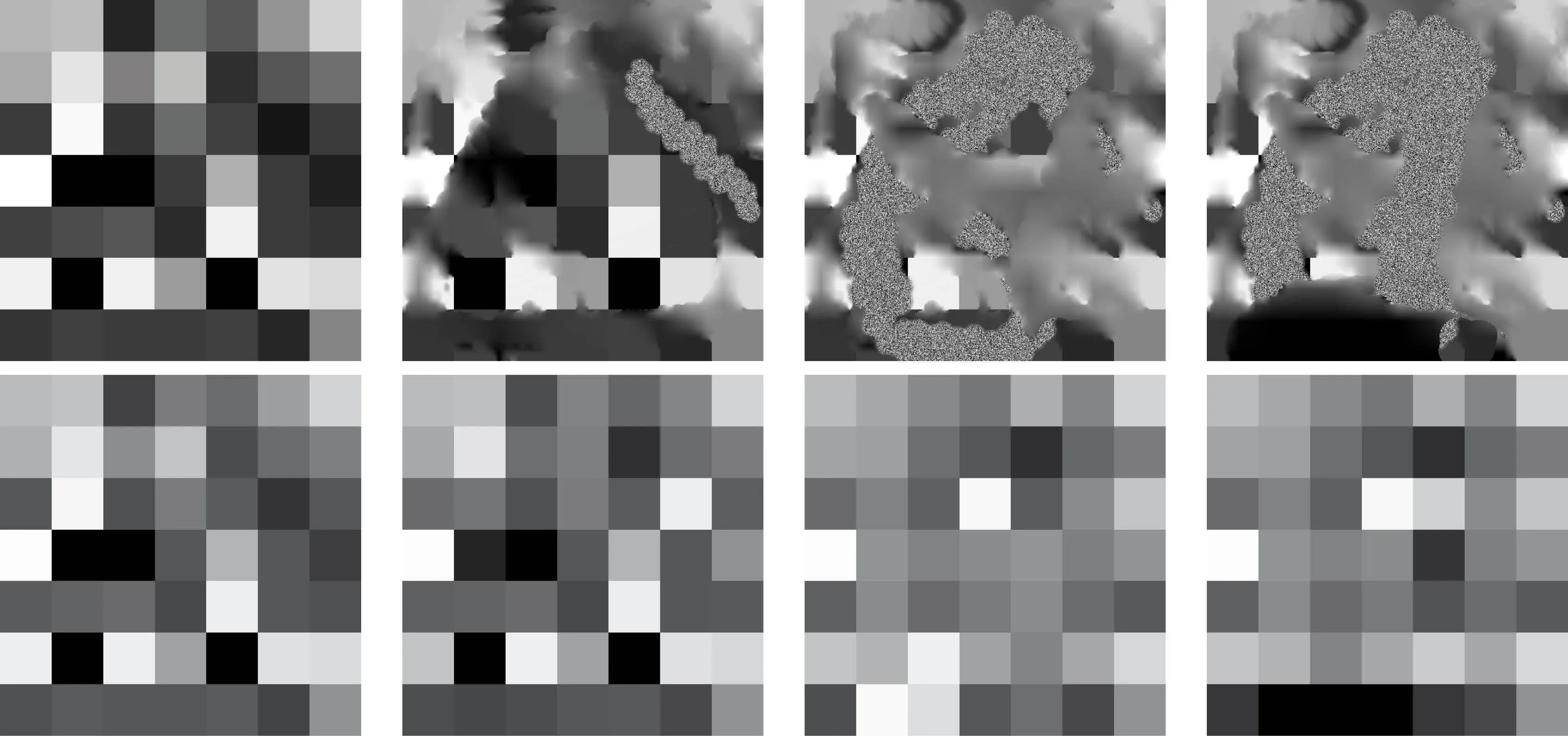

The tools represent different character classes with different character traits. Each has its own idea of ‘what the world should look like’. The paint brush and the sampler are the ones that can bring in new information, whereas the eraser, at the other end of the spectrum, wants to ‘undo’ any change by reverting the canvas to its original state. It wants things to go back to ‘the way things used to be’. Similarly, the inpainting tool can delete parts of the image and fill them with information interpolated from the surrounding pixels. The selection tool, located in the center, can protect a region from being changed. It wants things to simply ‘stay as they are’. The smudge and sharpen tools are antagonists of sorts: one blurs hard edges and shifts things slightly, whereas the other wants things to be clearly defined, ideally black and white. The randomizer causes a bit of chaos by scrambling the pixels it can find. The RGB shifter is special in that it can seemingly create color where there was none before. The tools were deliberately selected to span a wide gamut, and the space they are located in is designed to cause friction, with the potential for interesting things to happen.
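To make the tools' roles more concrete, here is a minimal sketch of how several of them might be expressed as pixel operations on a grayscale canvas. It is not the project's implementation: the canvas representation, the square brush, the function names (`erase`, `smudge`, `sharpen`, `inpaint`, `randomize`, `apply_with_selection`) and parameters such as `amount` and `iterations` are all hypothetical. The sharpen and inpaint functions swap in two standard techniques (an unsharp mask and a naive diffusion fill), and the RGB shifter is left out because it needs separate color channels.

```python
import random

# Hypothetical canvas: rows of grayscale values in [0.0, 1.0] (0 = black, 1 = white).
Canvas = list[list[float]]


def copy(c: Canvas) -> Canvas:
    return [row[:] for row in c]


def region(c: Canvas, cx: int, cy: int, r: int):
    """Yield (x, y) inside a square brush of radius r centered on (cx, cy), clipped to the canvas."""
    h, w = len(c), len(c[0])
    for y in range(max(0, cy - r), min(h, cy + r + 1)):
        for x in range(max(0, cx - r), min(w, cx + r + 1)):
            yield x, y


def box_mean(c: Canvas, x: int, y: int) -> float:
    """Mean of the 3x3 neighborhood around (x, y), clipped at the borders."""
    h, w = len(c), len(c[0])
    vals = [c[j][i]
            for j in range(max(0, y - 1), min(h, y + 2))
            for i in range(max(0, x - 1), min(w, x + 2))]
    return sum(vals) / len(vals)


def erase(c: Canvas, original: Canvas, cx: int, cy: int, r: int) -> Canvas:
    """Eraser: revert pixels under the brush to the original canvas."""
    out = copy(c)
    for x, y in region(c, cx, cy, r):
        out[y][x] = original[y][x]
    return out


def smudge(c: Canvas, cx: int, cy: int, r: int) -> Canvas:
    """Smudge: replace each pixel under the brush with its local 3x3 mean (a box blur)."""
    out = copy(c)
    for x, y in region(c, cx, cy, r):
        out[y][x] = box_mean(c, x, y)
    return out


def sharpen(c: Canvas, cx: int, cy: int, r: int, amount: float = 4.0) -> Canvas:
    """Sharpen (unsharp mask): push pixels away from their local mean, then clamp to [0, 1].
    A large amount drives values toward pure black or white."""
    out = copy(c)
    for x, y in region(c, cx, cy, r):
        v = c[y][x] + amount * (c[y][x] - box_mean(c, x, y))
        out[y][x] = min(1.0, max(0.0, v))
    return out


def inpaint(c: Canvas, cx: int, cy: int, r: int, iterations: int = 50) -> Canvas:
    """Inpaint (naive diffusion): delete the brushed region, then repeatedly refill it
    with the mean of each pixel's neighborhood so surrounding values seep in."""
    out = copy(c)
    hole = list(region(c, cx, cy, r))
    for x, y in hole:
        out[y][x] = 0.0  # 'delete' the region first
    for _ in range(iterations):
        prev = copy(out)
        for x, y in hole:
            out[y][x] = box_mean(prev, x, y)
    return out


def randomize(c: Canvas, cx: int, cy: int, r: int, seed: int = 0) -> Canvas:
    """Randomizer: shuffle the pixel values under the brush among themselves."""
    out = copy(c)
    coords = list(region(c, cx, cy, r))
    values = [c[y][x] for x, y in coords]
    random.Random(seed).shuffle(values)
    for (x, y), v in zip(coords, values):
        out[y][x] = v
    return out


def apply_with_selection(before: Canvas, after: Canvas, protected: list[list[bool]]) -> Canvas:
    """Selection: keep the 'before' value wherever the protection mask is set."""
    return [[before[y][x] if protected[y][x] else after[y][x]
             for x in range(len(before[0]))]
            for y in range(len(before))]
```

Because every tool maps a canvas to a new canvas, composing them is plain function application; for example, `sharpen(smudge(c, 10, 10, 3), 10, 10, 3)` runs the sharpen tool over a freshly smudged patch, which is one way to experiment with how the tools interact.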

The most interesting part is indeed when the agents (the tools) interact with one another. These interactions can produce secondary effects in the form of new visual artifacts. For instance, the RGB shifter's effect on its own may not be very noticeable, but the inpainting tool can amplify the colors considerably. Similarly, when the sharpen tool moves over parts of the image that have previously been smudged or inpainted, a distinct black and white line pattern often appears.